About

Let me introduce myself.

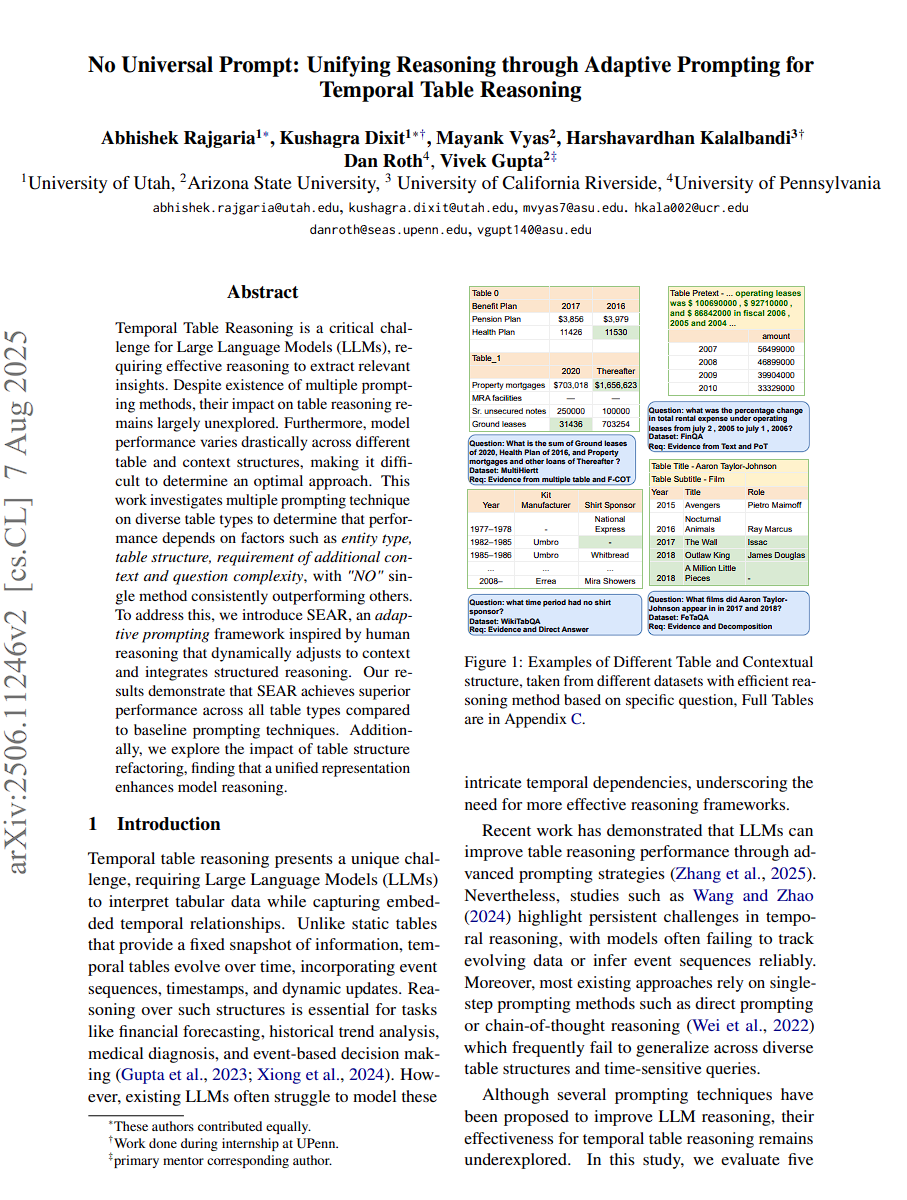

I am a dedicated computer science enthusiast who graduated with a Master's in Computer Science from the University of Utah, having previously earned a Bachelor's degree from Netaji Subhas Institute of Technology (NSIT), Delhi. My passion lies at the intersection of software development and AI, and I am eager to contribute my skills as both a Software Development Engineer and an AI/ML Engineer. With a solid foundation in programming languages such as Java, Python, C++, and TypeScript, along with proficiency in frameworks like PyTorch, LangChain, and LangGraph, I am excited to bring my adaptable mindset and proactive problem-solving approach to dynamic teams working on cutting-edge technologies.

Profile

Originally from India, I'm now navigating life in the USA, driven by a passion for computer science. Outside of my academic pursuits, I enjoy playing cricket and table tennis, and I find immense joy in exploring hiking trails.

- Full Name: Kushagra Dixit

- Birth Date: March 30, 1999

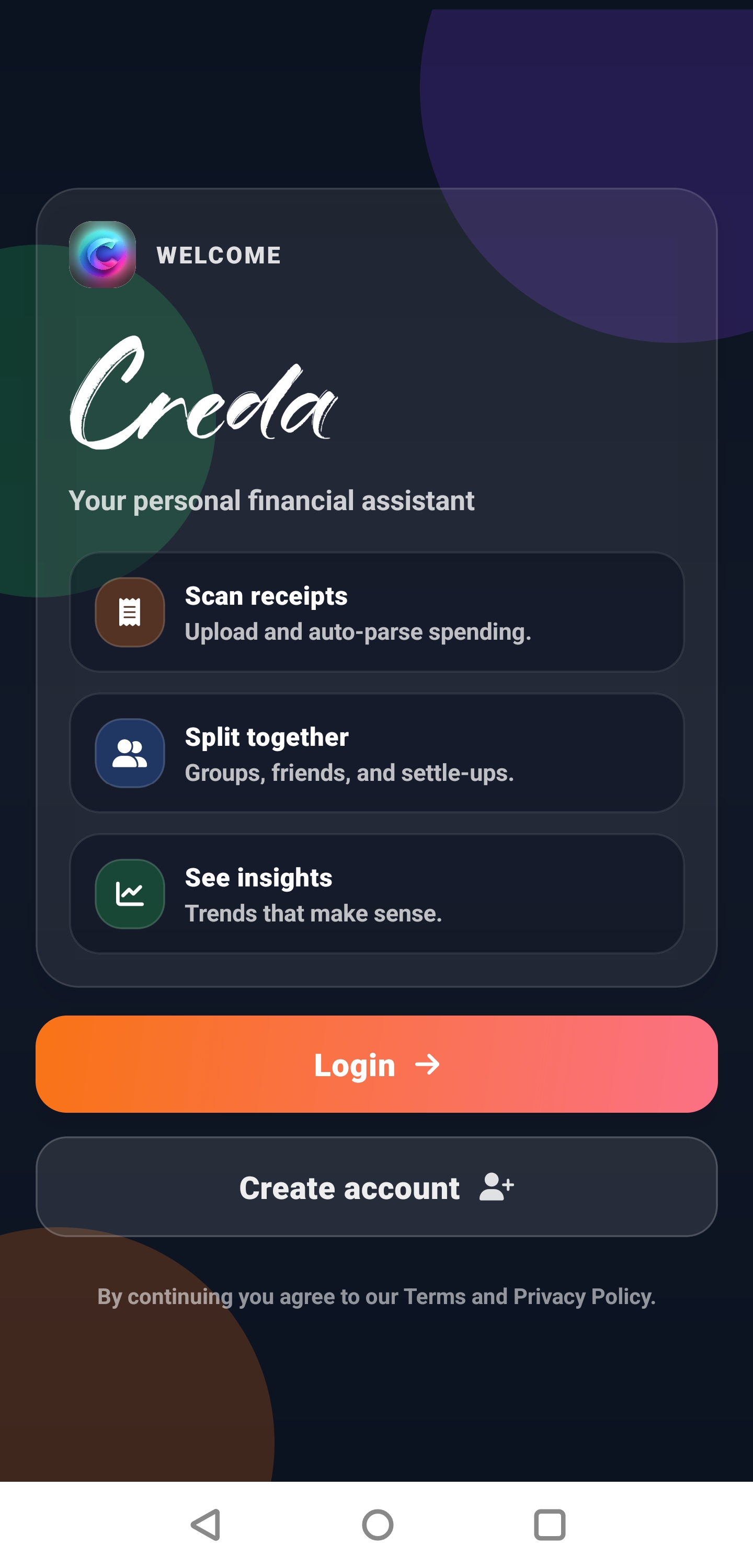

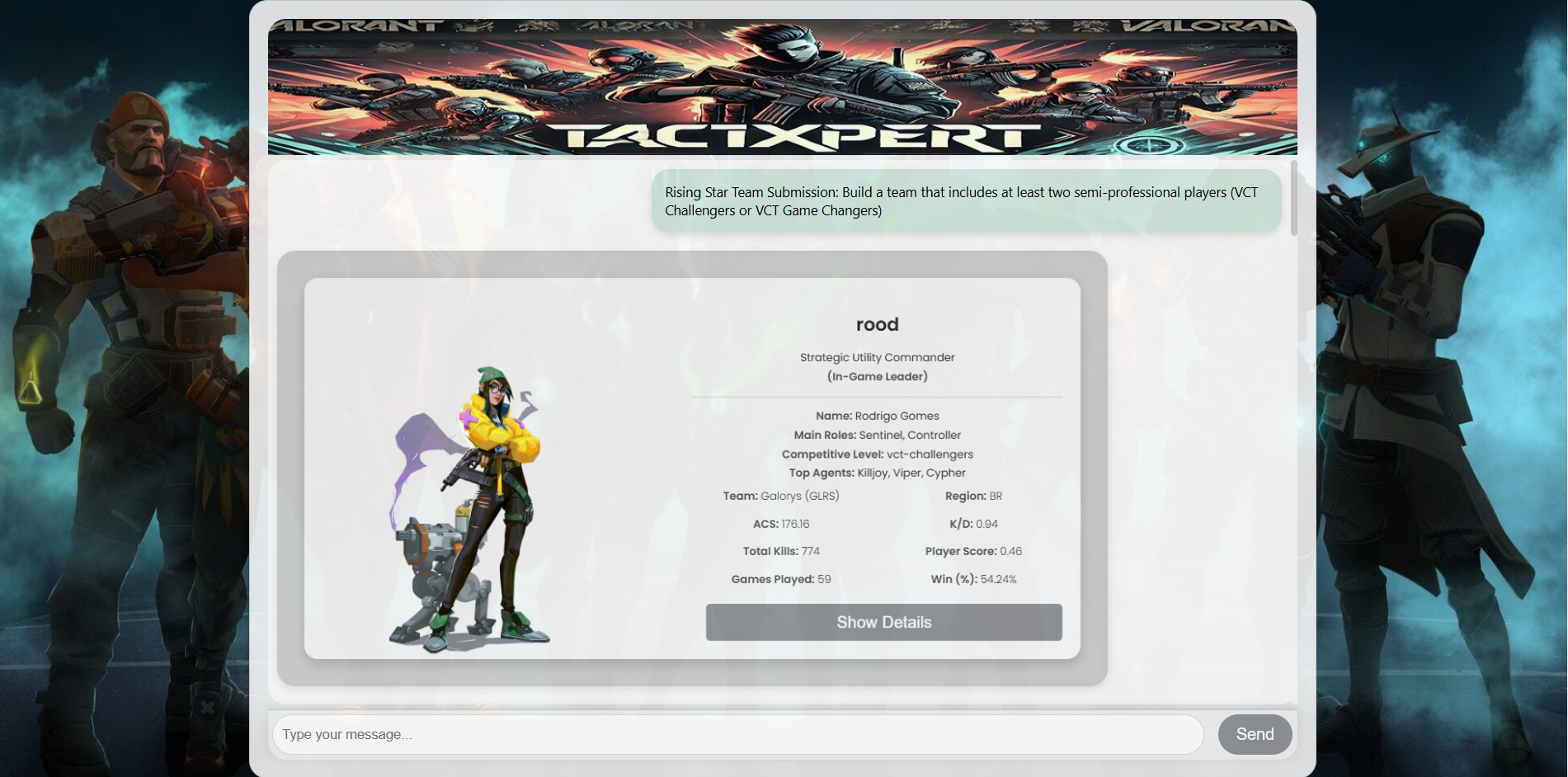

- Job: Research and Development Engineer, Software Development Engineer, Research Programmer

- Email: kushagradixit1[at]gmail[dot]com

Skills

I am proficient in programming languages such as Java, Python, C++, and TypeScript, and I have expertise in machine learning and LLM frameworks, including PyTorch, LangChain, and LangGraph. My skill set extends to technologies like Spring Boot, RESTful APIs, AWS, and MongoDB, reflecting versatility across backend, cloud, and database environments. With a proven ability to innovate and adapt, I am well-equipped to tackle diverse challenges in software development and machine learning.

- Programming Languages: C++, Java, Python, TypeScript, PHP.

- Technologies and Frameworks: Spring Boot, Microservice Architecture, RESTful APIs, PostgreSQL, AWS, GCP, MongoDB, Git, Apache Kafka, Kubernetes, GDB, Unix.

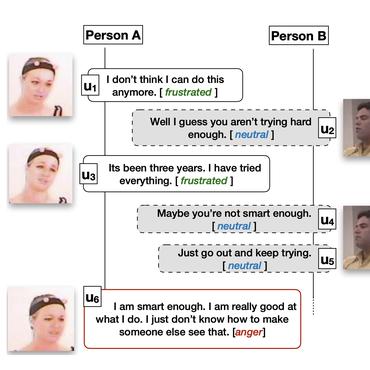

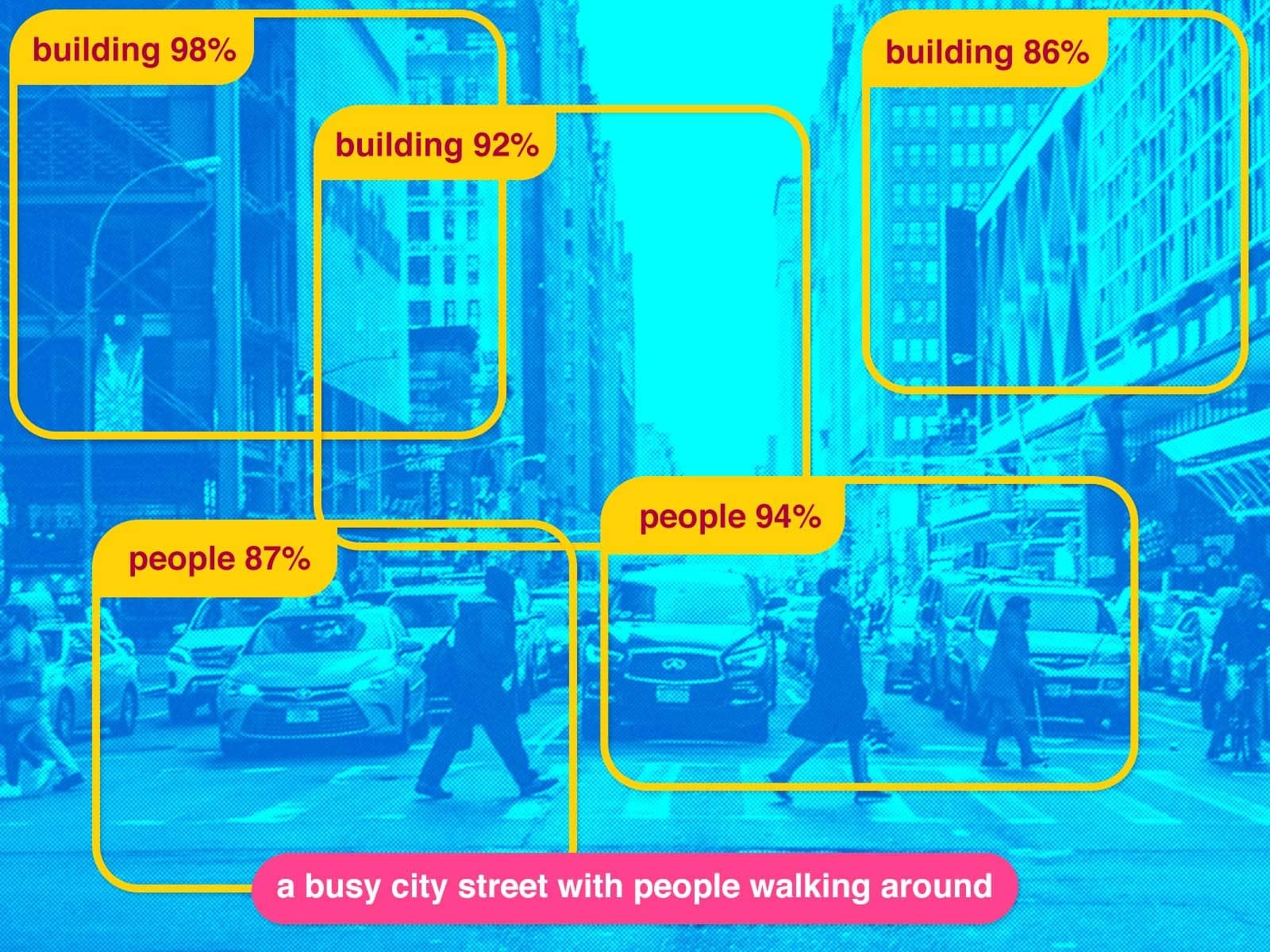

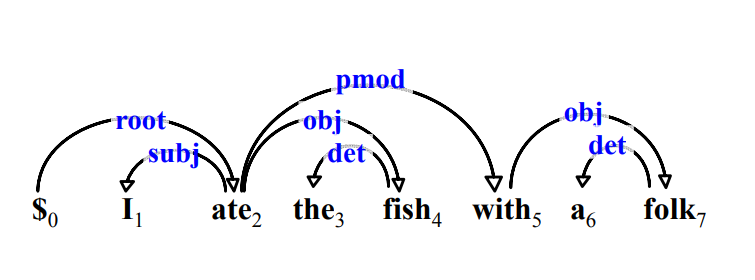

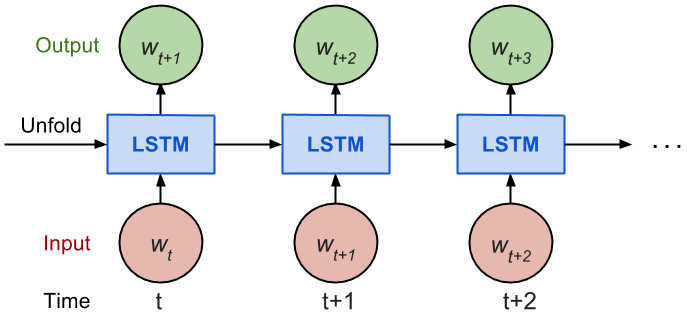

- Machine Learning: PyTorch, Keras, TensorFlow, scikit-learn, NumPy, pandas, Deep Learning, Transformers (Hugging Face), Word embeddings (Word2Vec, GloVe, BERT), Large Language Models, LangChain, LangGraph.